In February 2020, the New Style Jobseeker’s Allowance (JSA) service was in private beta, handling around 10% of new applications. To move to public beta in June, the service had to pass a Government Digital Service (GDS) assessment to confirm that it met the Digital Service Standard.

In the lead-up to the assessment, the team faced several challenges while working to the Standard, including a massive increase in claims due to COVID-19, so it was rewarding to see our hard work pay off with a ‘met’ result.

I’m a user researcher on the team and this assessment was the first one I’ve been part of. We learnt a lot during the process and I’d like to share those insights.

1. Use the Service Standard as your guide from day one

Perhaps the best advice I’ve heard about preparing for a service assessment is that you should need minimal preparation if you’re working in the right way.

Preparing in the midst of a lockdown proved even more difficult than usual, but our efforts to work in an agile way and to use the Service Standard as a guide helped put us in a good position.

I joined the service team in late 2019 and one of my first tasks as a user researcher was to conduct an in-depth review of evidence. Another user researcher and I mapped the existing research directly to the Service Standard to gauge our confidence and identify research gaps.

We then translated that review into a user research plan. The plan was ambitious from the outset, and it ended up being compressed into the window between the pre-election period and the COVID-19 outbreak, both of which limited our ability to recruit participants and conduct in-person user research.

When forced to make choices about what to cut out of our original plan, we kept the Service Standard in mind. Even if we couldn’t always get the depth of research we preferred, we could at least make sure our research covered all criteria.

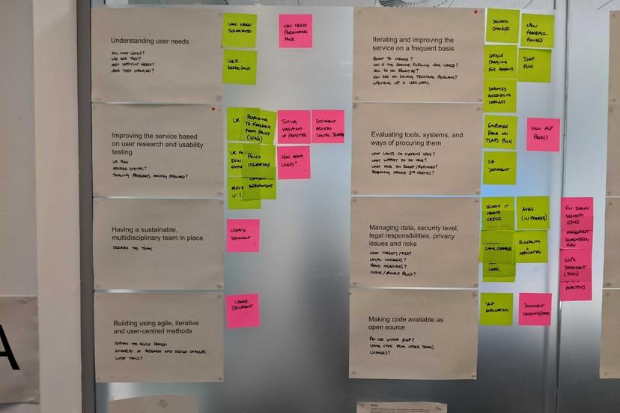

The same type of exercise happened across the wider team. We posted the Service Standard criteria on the wall in our workspace as a visible reference. Our service designer facilitated periodic review meetings to determine if we were still on target, and what actions we should take if we weren’t.

2. Document and demonstrate research and design decisions

During our private beta, we wanted to make sure that we had a robust evidence base, and that we were using it to generate and test hypotheses.

Through collaborative effort across user research, design, and analysis, we made sure our iterations were tied to user needs and made measurable improvements to the service’s success.

During the assessment we showed examples of where we had collaborated across roles to identify user needs, test designs, and measure success or failure.

We had picked up the service from another team in October 2019 and had been working quickly from that point on, so we ended up having to collate the details for many of these examples shortly before the assessment.

My advice, and something we are aiming to do better as we move forward, is to keep good documentation as you go so you can keep assessment prep to a minimum. Amongst other things, it will help you avoid the trap of over-preparing.

3. Tell a story and keep it concise

The assessment took about four hours. It sounds like a long time, but in many ways it didn’t feel like nearly enough. Given the limited time, it’s worth thinking through exactly what story you want to tell. I had so much I wanted to say about our users, their needs, and the research that I probably did too much talking! Next time, I’ll be more careful about figuring out the key elements of the story and keeping it concise.

It's a balancing act. The panel will not have been closely involved in your service, so you need a narrative that will give them sufficient context but not bombard them with details.

One way we got feedback before the assessment was to find some people outside the team and run through our story and key points with them. Explaining the service to people who weren’t familiar with it but had some prior experience of assessments was a useful way to hone our narrative.

Due to COVID-19, our assessment was conducted remotely. For the most part, this worked well. I think attending from home helped make the situation less stressful. Plenty of breaks were scheduled, and we successfully worked through a few technical issues that cropped up.

We couldn’t point to examples on the wall as we would in person, so we prepared some slides as a substitute. This worked well where visuals added value, such as showing design iterations, but elsewhere the slides contributed less than we expected and could have been left out.

I learned that if you’re going to use slides, ruthlessly edit the deck. Aim for the assessment to be more of a conversation and less of a presentation.

4. Don’t be scared

It’s natural to approach anything called an ‘assessment’ with some apprehension, but it’s important to remember that it’s not a judgement of you. Instead, it’s a chance to show the service you’ve helped to create to independent experts, tell them what you’ve learned, and have them help you confirm that you’ve identified problems before they become too difficult or costly to fix.

GDS clearly spell out the topics that will be covered during an assessment beforehand, so I had a good idea of what some of the difficult user research questions might be. I tried to be upfront about any areas where we had wanted to conduct more or different research, and why constraints forced us to take a less conventional approach to getting the evidence we needed.

I also learned to keep in mind that service assessments set a high bar on purpose. They are an opportunity to have other people take a critical look and tell us what to improve, so regardless of the outcome, they help us make sure we’re building good services that meet our users’ needs.

Looking ahead

As the New Style JSA service moves towards its live assessment, the team continue to face challenges like working remotely and trying to conduct user research during a pandemic. Hopefully, the lessons we learnt while preparing for our previous assessment will help us achieve another ‘met’ result for the service.

3 comments

Comment by Andrew

I was eligible and used the new service as my own piece of user research. I found it easy to use and had the right result.

What was mind-boggling, though, is that the only way to turn the allowance off after finding a job was to call. There's a bit of me that thinks that, whilst claiming efficiently is important, closing a claim is even more important, to stop overpayments that could potentially force claimants into debt.

Comment by Garrett Stettler

I’m glad to hear applying went well for you. Regarding closing claims, that’s something we’re aware of and are looking at going forward.

Comment by Dan

Lovely stuff, Garrett. We've got a number of services in AtW that will need taking through GDS, and I'll be using your blog for inspiration.